LLMs are no longer just text predictors — they’re turning into reasoning engines. But while model performance improves, our ability to manage their context has lagged behind.

As we build richer GenAI systems — personalized shopping assistants, agentic workflows, retrieval-augmented copilots — we’re increasingly duct-taping memory, tools, user state, and business logic into brittle prompt strings. It works, until it doesn't.

Model Context Protocol (MCP) is a response to this architectural gap. It offers a way to define, structure, and operationalize context so that models reason more intelligently — and systems scale more reliably.

This isn’t just about prompt templates. It’s about a design philosophy that treats context as data, not prose.

In this article, I’ll break down:

What MCP is and what it includes

Why it matters for production-grade LLM systems

A step-by-step example of MCP in a GenAI e-commerce assistant

Ownership and design implications across PM, eng, and infra teams

When and how to introduce MCP in your own stack

Let’s get into it.

What is Model Context Protocol (MCP)?

Model Context Protocol (MCP) is an open standard for defining, sharing, and managing the context that LLMs use during inference.

It’s meant to solve a growing problem: as we build complex GenAI systems (multi-agent workflows, RAG pipelines, or tools interacting with LLMs), we need a reliable and interpretable way to pass “context” into models.

If you’re new to all this terminology (or to get the most out of this write-up), be sure to read through some other deep dives on LLM Agents, RAG systems, and general LLM techniques.

Why is this important?

LLMs don’t work in isolation anymore. Modern AI products involve:

Tool use (e.g., APIs, search, plugins)

Memory (long-term, short-term)

Retrieval-augmented generation (RAG)

User state, preferences, history

Chain-of-thought reasoning

Multi-step agents

Each component needs clear, structured context to work well. But today, most developers just… shove it all into a prompt. MCP aims to bring order and clarity.

What counts as "context"?

In MCP, context is any information that guides the model’s behaviour, such as:

System instructions

Examples / few-shot prompts

Documents retrieved via RAG

Tools available to the model

User history or preferences

Session memory
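To make "context as data" concrete, here is a minimal sketch of the components above as a typed Python structure. The class and field names are assumptions chosen for illustration; they are not part of any MCP specification.

```python
from dataclasses import asdict, dataclass, field
from typing import Any


@dataclass
class ModelContext:
    """Hypothetical container for the context components listed above."""
    system_instruction: str
    examples: list[dict[str, str]] = field(default_factory=list)             # few-shot prompts
    retrieved_documents: list[dict[str, Any]] = field(default_factory=list)  # RAG results
    tools: list[str] = field(default_factory=list)                           # tool names
    user_preferences: dict[str, Any] = field(default_factory=dict)           # history / preferences
    session_memory: list[str] = field(default_factory=list)                  # short-term notes

    def non_empty_sections(self) -> list[str]:
        """Names of the sections that actually carry content, in field order."""
        return [name for name, value in asdict(self).items() if value]


ctx = ModelContext(
    system_instruction="You are a helpful personal shopping assistant.",
    tools=["price_tracker", "style_matcher"],
    user_preferences={"shoe_size": "EU 43"},
)
print(ctx.non_empty_sections())  # ['system_instruction', 'tools', 'user_preferences']
```

Because each section is a named field, tooling can inspect, diff, or drop sections individually instead of parsing them back out of a prompt string.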

What does MCP actually define?

It defines a structured format (usually JSON or similar) to organize these components. Think of it as a schema or protocol — like how HTTP structures web communication, MCP structures LLM interaction.

Here’s a simplified, MCP-style example (it illustrates the idea rather than the exact message format in the official spec):

```json
{
  "system_instruction": "You are a helpful personal shopping assistant.",
  "user_goal": "Find waterproof sneakers under €150 in a minimalist style.",
  "retrieved_documents": [...],
  "tools": ["price_tracker", "style_matcher"],
  "chat_history": [...],
  "memory": {
    "name": "Pranav",
    "shoe_size": "EU 43",
    "style": ["Minimalist", "Neutral colors"]
  }
}
```

Then, an MCP interpreter or router translates this context into a full prompt the LLM understands.
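For comparison, the official MCP specification has clients and servers exchange JSON-RPC 2.0 messages, for example `tools/list` to discover a server's tools and `tools/call` to invoke one. The sketch below only builds such request payloads with the standard library; the tool name `price_tracker`, its `product_link` argument, and the URL are hypothetical, and a real client would send these messages over a transport such as stdio rather than print them.

```python
import json
from itertools import count
from typing import Any, Optional

_next_id = count(1)  # each request needs an id not previously used in the session


def jsonrpc_request(method: str, params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request object (the envelope MCP messages use)."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Ask a server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool; the name and arguments here are hypothetical.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "price_tracker", "arguments": {"product_link": "https://example.com/p/123"}},
)

print(json.dumps(list_tools))  # {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
print(json.dumps(call_tool))
```

The context object above is still a useful mental model; in the protocol itself, pieces of it arrive as separate, typed messages rather than one blob.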

Who’s behind MCP?

MCP was introduced and open-sourced by Anthropic in November 2024. Since then, the wider open-source AI infra community has been adding support for it, including projects like LangChain, LlamaIndex, and agent frameworks like AutoGen or CrewAI. It’s not yet a universal standard, but momentum is growing.

Why it matters for product builders (like you):

Decouple logic from prompts for better modularity and reuse

Build multi-agent or tool-using systems more cleanly

Debug more easily and trace which context influenced an output

Support personalization, memory, and long-term interactions better

Let’s walk through an end-to-end Model Context Protocol (MCP)-style flow in a GenAI-powered e-commerce assistant. Think of it as a personal shopping concierge that can:

Understand the customer’s preferences

Retrieve relevant product info

Use tools like price comparison or size guides

Maintain long-term memory for better recommendations over time

As with everything, examples best illustrate the concepts I’ve mentioned before. So let’s drill down with an e-commerce scenario.

Scenario: GenAI Shopping Assistant

User goal: “Find me stylish, waterproof sneakers under €150 for city use. I prefer neutral colors and minimalist design.”

Let’s break this into an MCP-style flow with structured context, tools, memory, and model behavior.

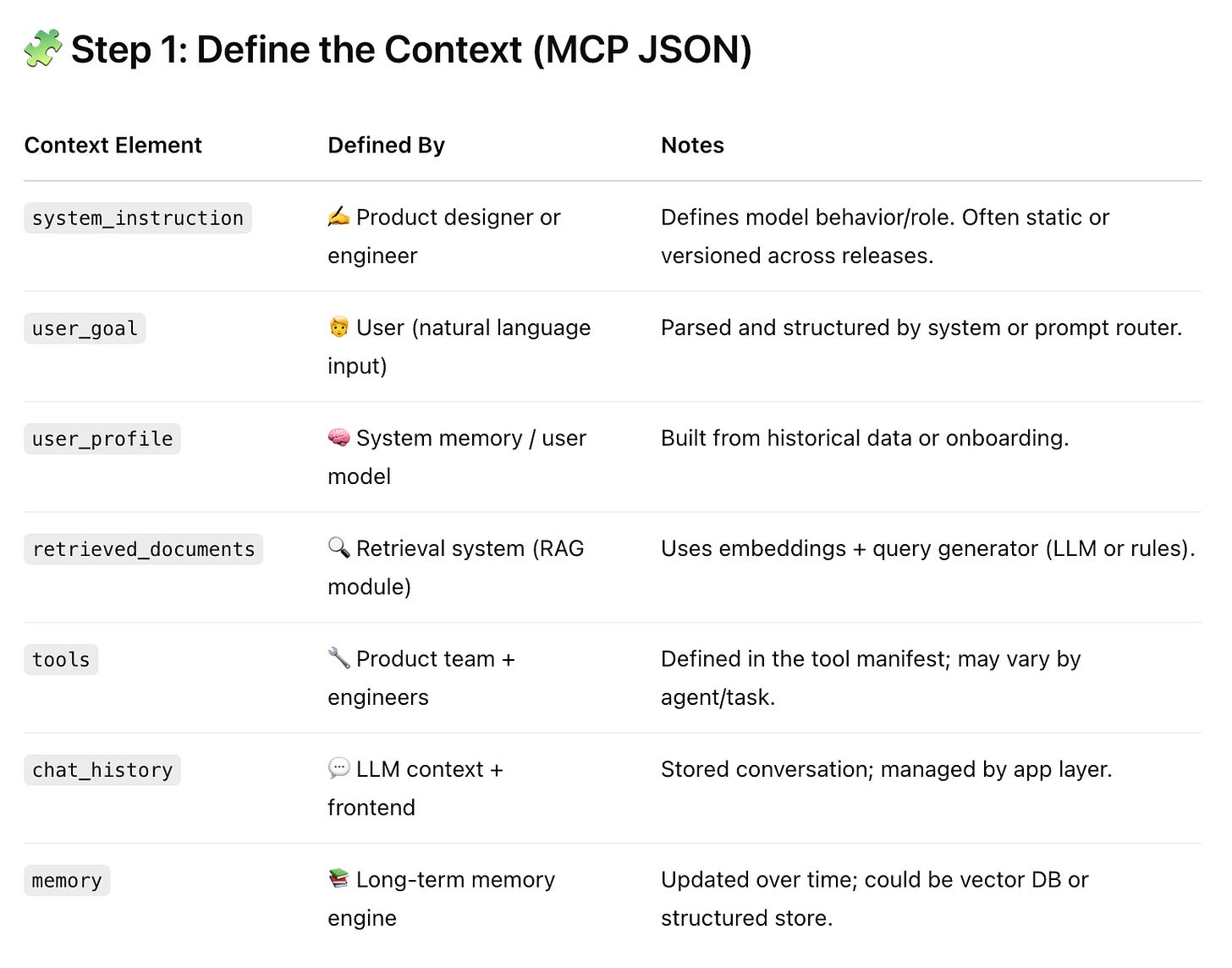

Step 1: Define the Context (MCP JSON)

```json
{
  "system_instruction": "You are a stylish personal shopping assistant. Always recommend relevant, high-quality, fashion-forward products.",
  "user_goal": "Find stylish, waterproof sneakers under €150 for city use in neutral colors and minimalist design.",
  "user_profile": {
    "name": "Pranav",
    "style": ["minimalist", "neutral", "luxury-but-practical"],
    "shoe_size": "EU 43",
    "past_purchases": ["Common Projects", "On Cloudnova", "Nike Air Max 1"],
    "preferred_price_range": "100-200 EUR"
  },
  "retrieved_documents": [
    {
      "source": "ProductCatalog",
      "query": "waterproof sneakers neutral minimalist under 150",
      "results": [
        {
          "name": "Vessi Cityscape",
          "price": "€135",
          "color": "Slate Grey",
          "features": ["Waterproof", "Lightweight", "Minimalist"],
          "image_url": "...",
          "product_link": "..."
        },
        {
          "name": "Allbirds Mizzle",
          "price": "€145",
          "color": "Natural White",
          "features": ["Water-resistant", "Wool blend", "Sustainable"],
          "image_url": "...",
          "product_link": "..."
        }
      ]
    }
  ],
  "tools": [
    {
      "name": "PriceTracker",
      "description": "Checks if the product is on sale",
      "args": ["product_link"]
    },
    {
      "name": "StyleComparer",
      "description": "Compares visual style similarity to past purchases",
      "args": ["image_url", "past_purchases"]
    }
  ],
  "chat_history": [
    {
      "role": "user",
      "content": "I'm looking for shoes I can wear in Amsterdam — rainproof but still stylish."
    },
    {
      "role": "assistant",
      "content": "Got it! I’ll prioritize waterproof features with a clean aesthetic. Any preferred brands?"
    }
  ],
  "memory": {
    "long_term": {
      "Pranav_shoe_style": "modern minimalist, neutral colors",
      "purchase_behavior": "Willing to pay premium for quality"
    }
  }
}
```
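Before an interpreter consumes this object, it is worth checking it. A minimal sketch, assuming the top-level keys of the JSON above are the contract: report missing keys or malformed tool entries, and list retrieved products that fit the €150 budget from the user goal. `validate_context` and `within_budget` are hypothetical helpers, and the context is a trimmed-down copy of Step 1.

```python
from typing import Any

REQUIRED_KEYS = {"system_instruction", "user_goal", "user_profile",
                 "retrieved_documents", "tools", "chat_history", "memory"}


def validate_context(ctx: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the context looks usable."""
    problems = [f"missing key: {k}" for k in sorted(REQUIRED_KEYS - set(ctx))]
    for tool in ctx.get("tools", []):
        if "name" not in tool or "args" not in tool:
            problems.append(f"malformed tool entry: {tool}")
    return problems


def within_budget(ctx: dict[str, Any], budget_eur: float) -> list[str]:
    """Names of retrieved products whose price (e.g. "€135") fits the budget."""
    names = []
    for doc in ctx.get("retrieved_documents", []):
        for item in doc.get("results", []):
            if float(item["price"].lstrip("€")) <= budget_eur:
                names.append(item["name"])
    return names


# Trimmed-down copy of the Step 1 context.
ctx = {
    "system_instruction": "You are a stylish personal shopping assistant.",
    "user_goal": "Find stylish, waterproof sneakers under €150.",
    "user_profile": {"name": "Pranav", "shoe_size": "EU 43"},
    "retrieved_documents": [{"source": "ProductCatalog", "results": [
        {"name": "Vessi Cityscape", "price": "€135"},
        {"name": "Allbirds Mizzle", "price": "€145"},
    ]}],
    "tools": [{"name": "PriceTracker", "args": ["product_link"]}],
    "chat_history": [],
    "memory": {"long_term": {}},
}
print(validate_context(ctx))    # []
print(within_budget(ctx, 150))  # ['Vessi Cityscape', 'Allbirds Mizzle']
```

Catching a missing section here is cheaper than discovering it as a vague or hallucinated answer later.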

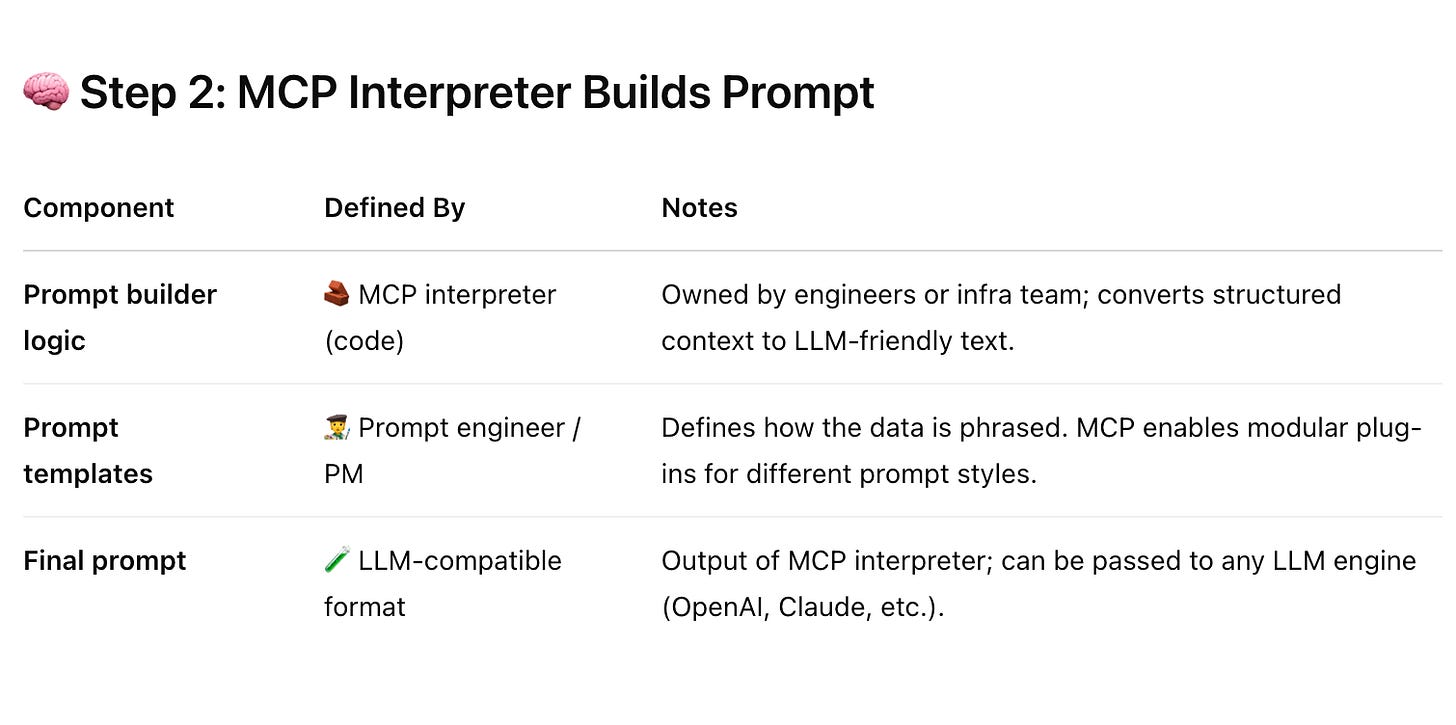

Step 2: MCP Interpreter Builds Prompt

The interpreter compiles all this into a prompt like:

```text
System: You are a fashion-savvy personal shopping assistant helping Pranav find stylish waterproof sneakers.

User Goal: Pranav is looking for waterproof, minimalist sneakers in neutral colors under €150.

User Profile: Pranav wears EU 43, prefers neutral colors, and has previously bought Common Projects and On Cloudnova. He likes quality over hype.

Retrieved Products:
- Vessi Cityscape: Waterproof, €135, Slate Grey, minimalist
- Allbirds Mizzle: Water-resistant, €145, sustainable, Natural White

Use Tools: PriceTracker, StyleComparer

Based on the above, suggest the best options. Justify your picks with reasoning and style compatibility.
```
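The compilation step itself can be plain string assembly. A minimal sketch, assuming a dict shaped like the Step 1 JSON; `build_prompt` and its section labels are hypothetical, and a production interpreter would also need to handle token budgets, truncation, and escaping of untrusted text.

```python
from typing import Any


def build_prompt(ctx: dict[str, Any]) -> str:
    """Flatten a structured context into labelled prompt sections."""
    profile = ctx["user_profile"]
    lines = [
        f"System: {ctx['system_instruction']}",
        f"User Goal: {ctx['user_goal']}",
        f"User Profile: {profile['name']} wears {profile['shoe_size']}, "
        f"style: {', '.join(profile['style'])}.",
        "Retrieved Products:",
    ]
    # One line per retrieved product, across all retrieval sources.
    for doc in ctx["retrieved_documents"]:
        for item in doc["results"]:
            lines.append(f"- {item['name']}: {', '.join(item['features'])}, "
                         f"{item['price']}, {item['color']}")
    lines.append("Use Tools: " + ", ".join(t["name"] for t in ctx["tools"]))
    lines.append("Based on the above, suggest the best options. "
                 "Justify your picks with reasoning and style compatibility.")
    return "\n".join(lines)


ctx = {
    "system_instruction": "You are a stylish personal shopping assistant.",
    "user_goal": "Find waterproof, minimalist sneakers in neutral colors under €150.",
    "user_profile": {"name": "Pranav", "shoe_size": "EU 43",
                     "style": ["minimalist", "neutral"]},
    "retrieved_documents": [{"results": [
        {"name": "Vessi Cityscape", "price": "€135", "color": "Slate Grey",
         "features": ["Waterproof", "Lightweight", "Minimalist"]},
    ]}],
    "tools": [{"name": "PriceTracker"}, {"name": "StyleComparer"}],
}
print(build_prompt(ctx))
```

Keeping this rendering in one function means the structured context stays the source of truth, and prompt wording can change without touching retrieval, tools, or memory code.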

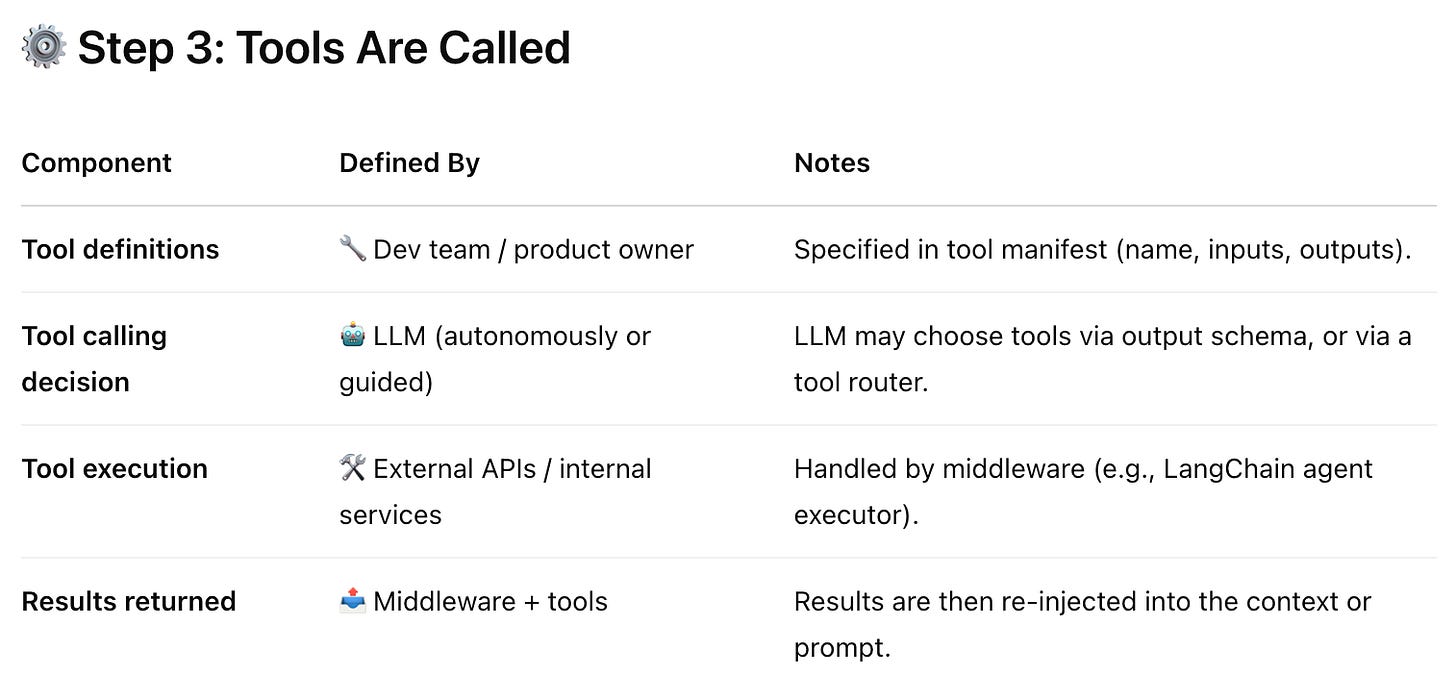

Step 3: Tools Are Called

The model suggests using two tools:

Call StyleComparer to compare product images to Pranav's past taste

Use PriceTracker to flag any products on sale

Example outputs from tools:

Vessi: 92% style match, price stable

Allbirds: 85% style match, currently 10% off
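On the application side, "calling a tool" usually means looking up a registered function by name and passing it the arguments the model chose. A minimal sketch: both functions are stubs hard-coded to the illustrative outputs above (no real price or image analysis), the short keys `"vessi"` and `"allbirds"` stand in for real URLs, and the registry and `dispatch` names are hypothetical.

```python
from typing import Any, Callable


# Stub tools: hard-coded to the example outputs above, not real services.
def price_tracker(product_link: str) -> dict[str, Any]:
    discounts = {"vessi": 0, "allbirds": 10}  # percent off, per the example
    return {"discount_pct": discounts.get(product_link, 0)}


def style_comparer(image_url: str, past_purchases: list[str]) -> dict[str, Any]:
    # A real implementation would compare images against past_purchases; the stub ignores it.
    scores = {"vessi": 92, "allbirds": 85}  # percent style match, per the example
    return {"style_match_pct": scores.get(image_url, 0)}


TOOLS: dict[str, Callable[..., dict[str, Any]]] = {
    "PriceTracker": price_tracker,
    "StyleComparer": style_comparer,
}


def dispatch(call: dict[str, Any]) -> dict[str, Any]:
    """Run a model-requested call of the form {"name": ..., "arguments": {...}}."""
    if call["name"] not in TOOLS:
        return {"error": f"unknown tool: {call['name']}"}
    return TOOLS[call["name"]](**call["arguments"])


past = ["Common Projects", "On Cloudnova", "Nike Air Max 1"]
results = {}
for product in ["vessi", "allbirds"]:
    style = dispatch({"name": "StyleComparer",
                      "arguments": {"image_url": product, "past_purchases": past}})
    price = dispatch({"name": "PriceTracker", "arguments": {"product_link": product}})
    results[product] = {**style, **price}
print(results)
```

Returning an error object for unknown tools, instead of raising, lets the result be fed back to the model so it can correct its request.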

Step 4: Assistant Response (LLM Output)

“Based on your style and past purchases, I’d recommend the Vessi Cityscape (€135). It’s highly waterproof, clean in design, and very close to your previous aesthetic.

The Allbirds Mizzle is another solid choice — a bit more casual and sustainable, and currently 10% off. Want me to check sizing availability or delivery times?”

Step 5: Memory Updated

Long-term memory can be updated with:

"Pranav liked Vessi design."

"Responded positively to sale info."

"Might be open to sustainable materials."

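A memory update can be a small, explicit write rather than a rewrite of the whole prompt. A minimal sketch, assuming long-term memory is stored as a list of notes per user (a slightly different shape from the Step 1 JSON, chosen for brevity); `remember` is a hypothetical helper that skips exact duplicates.

```python
from typing import Any


def remember(memory: dict[str, Any], user: str, notes: list[str]) -> list[str]:
    """Append notes to a user's long-term memory, skipping exact duplicates.

    Returns only the notes that were actually added.
    """
    existing: list[str] = memory.setdefault("long_term", {}).setdefault(user, [])
    added: list[str] = []
    for note in notes:
        if note not in existing:
            existing.append(note)
            added.append(note)
    return added


memory = {"long_term": {"Pranav": ["modern minimalist, neutral colors"]}}
print(remember(memory, "Pranav", [
    "Pranav liked Vessi design.",
    "Responded positively to sale info.",
    "Might be open to sustainable materials.",
]))  # all three notes are new, so all three are returned
print(remember(memory, "Pranav", ["Pranav liked Vessi design."]))  # []
```

Returning what was added gives you a natural audit log of how the user profile evolves across sessions.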
Why this matters (and why MCP helps)

Structured, modular context → Better model reasoning

Tool-use integration → Richer assistant behavior

Memory-aware → More personalized long-term experiences

Easy to debug + maintain → Each part of the context is traceable
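One way to make "each part of the context is traceable" concrete is to log a short fingerprint of every context section next to each model response, so you can later see which inputs changed between two outputs. A minimal sketch using the standard library's `hashlib`; the function names and the 12-character digest length are assumptions.

```python
import hashlib
import json
from typing import Any


def context_fingerprint(ctx: dict[str, Any]) -> dict[str, str]:
    """Map each top-level context section to a short, stable SHA-256 digest."""
    return {
        key: hashlib.sha256(
            json.dumps(value, sort_keys=True, ensure_ascii=False).encode("utf-8")
        ).hexdigest()[:12]
        for key, value in sorted(ctx.items())
    }


def changed_sections(before: dict[str, str], after: dict[str, str]) -> list[str]:
    """Sections whose fingerprint differs, or that appear in only one record."""
    return sorted(k for k in before.keys() | after.keys() if before.get(k) != after.get(k))


v1 = {"user_goal": "Sneakers under €150", "tools": ["PriceTracker"]}
v2 = {"user_goal": "Sneakers under €150", "tools": ["PriceTracker", "StyleComparer"]}
print(changed_sections(context_fingerprint(v1), context_fingerprint(v2)))  # ['tools']
```

If two responses differ and only `tools` changed, you know where to look first, which is much harder to establish from two long prompt strings.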

Actors and Actions in the MCP context

So MCP can be quite useful for logging, tracking, and managing large LLM systems. But who builds what, and who has input into which part of the system? Let’s break down ownership of each part of the flow: who defines what in an MCP-driven GenAI system, using our e-commerce shopping assistant as the running example.

We'll break this into the main phases, then for each component, map out who is responsible:

MCP itself doesn’t define the values — it defines the structure and interfaces to handle these cleanly.

Who is MCP useful for, why, and when

MCP is especially useful for teams building complex LLM-powered systems — whether you're working on:

Personalized shopping assistants

Multi-step agent workflows

Retrieval-Augmented Generation (RAG) systems

Memory-aware conversational bots

LLM-powered tools and internal copilots

Why use MCP

MCP brings clarity, structure, and scalability to LLM interactions by separating out and formalizing all the "hidden" context behind model behavior — such as goals, memory, tools, history, and retrieved documents.

Instead of shoving everything into one monolithic prompt, MCP turns context into a modular, inspectable object. That means:

Easier debugging and observability

Better reuse across different agents and tasks

Cleaner personalization and memory updates

More consistent and maintainable prompt engineering
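Because the context is an object rather than a string, different agents can receive different slices of the same context. A minimal sketch, assuming each agent declares which top-level sections it needs; the agent names and the `AGENT_VIEWS` mapping are hypothetical.

```python
from typing import Any

# Hypothetical agents and the context sections each one is allowed to see.
AGENT_VIEWS = {
    "recommender": {"system_instruction", "user_goal", "user_profile", "retrieved_documents"},
    "pricing": {"retrieved_documents", "tools"},
}


def view_for(agent: str, ctx: dict[str, Any]) -> dict[str, Any]:
    """Return only the sections the given agent is configured to receive."""
    allowed = AGENT_VIEWS[agent]
    return {k: v for k, v in ctx.items() if k in allowed}


ctx = {
    "system_instruction": "You are a stylish personal shopping assistant.",
    "user_goal": "Waterproof sneakers under €150",
    "user_profile": {"name": "Pranav"},
    "retrieved_documents": [],
    "tools": ["PriceTracker", "StyleComparer"],
    "memory": {"long_term": {}},
}
print(sorted(view_for("pricing", ctx)))  # ['retrieved_documents', 'tools']
```

The same context object then serves several agents, and a section such as `memory` can be withheld from agents that have no reason to see personal data.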

When to use MCP

You should reach for MCP when:

Your LLM use case is growing in complexity (e.g. agents, RAG, tools, long-term memory)

You're working in a production or multi-user environment

You want reliability and clarity in how context flows into your LLM

You're building a platform or shared infra where multiple apps or agents interact with models

What’s Next?

Model Context Protocol is still in its early days, but it’s quickly becoming essential infrastructure for building scalable, modular, and intelligent GenAI systems.

If you're working on:

A personalized shopping assistant

A multi-agent research tool

An LLM-powered internal copilot

Or anything that combines RAG, tools, and memory...

…consider adopting MCP principles now — even informally — to future-proof your architecture.

In upcoming posts, I’ll dive deeper into:

MCP templates and schema design

Live examples across e-commerce, HR tech, and SaaS copilots

Want a reusable MCP starter kit or LangChain-compatible boilerplate? Drop a comment or DM — I’m putting something together soon!
